Open-source AI wrappers have made life a lot easier for developers eager to speed up AI development, but they also bring distinct security challenges. These tools often handle sensitive information and act as a bridge between users and powerful AI models, which makes securing them a top priority.

Ignoring these security issues can lead to serious financial losses, reputational harm, and even legal trouble. In this blog post, we’ll look at the primary risks connected to open-source AI wrappers and go over practical solutions to protect them.

Key Risks and Vulnerabilities in Open-Source AI Wrappers

What are open-source AI wrappers? They are essentially software layers that make it easy to interact with complex AI models, like large language models (LLMs). Because their code is publicly accessible, they encourage community collaboration and bug fixes. However, that same openness can also be a security threat, since anyone can study the code for weaknesses and misuse it.

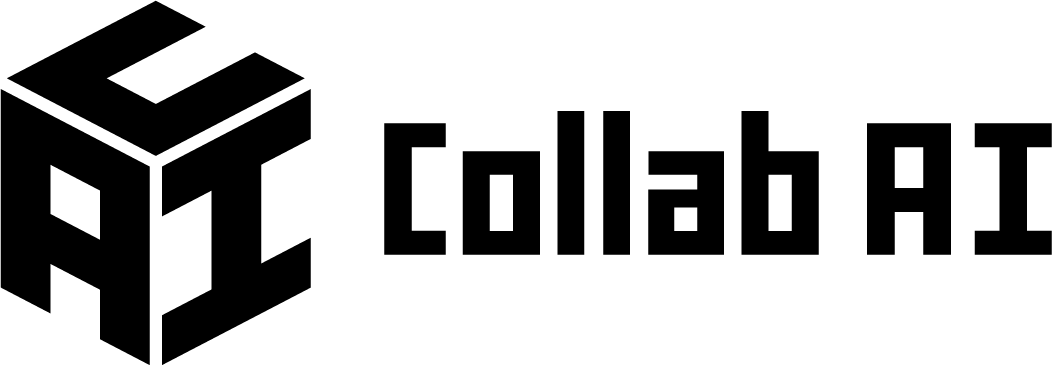

Here are some key risks to keep an eye on:

- Dependencies at Risk: These wrappers often rely on many third-party libraries, and some of these might have hidden flaws. If someone figures out how to exploit those flaws, they could compromise the entire wrapper or make it unusable.

- Injection Attacks: If input data isn’t sanitized properly, custom AI wrappers can easily become targets for injection attacks, like SQL or command injection. Attackers could then run their own code or access sensitive information.

- Authentication and Authorization Issues: Are passwords weak? Are API keys poorly managed? Maybe access controls are all over the place? If so, unauthorized people could easily sneak into the AI model or the system that supports it. That’s definitely a recipe for disaster!

- Data Exposure: Failing to handle data correctly, like storing sensitive info in plain text or skipping encryption during transfer, can result in data leaks and privacy breaches.

- Legacy Code: Older code with known vulnerabilities can be found in many open-source projects. These components must be kept patched and updated so that you can avoid exploitation.

Impact of Vulnerabilities: Real-World Scenarios

Although tech professionals are only now starting to pay attention to breaches involving “AI wrappers,” the underlying problems trace back to long-standing open-source and AI security issues.

For example, the XZ Utils backdoor incident shows how attackers can target widely used open-source components, even though it didn’t involve an AI wrapper.

Similarly, vulnerabilities found in tools like PyTorch TorchServe (CVE-2023-43654) and TensorFlow/Keras show how flaws in AI serving can lead to serious issues, like remote code execution and data compromise.

Best Practices for Securing Open-Source AI Wrappers

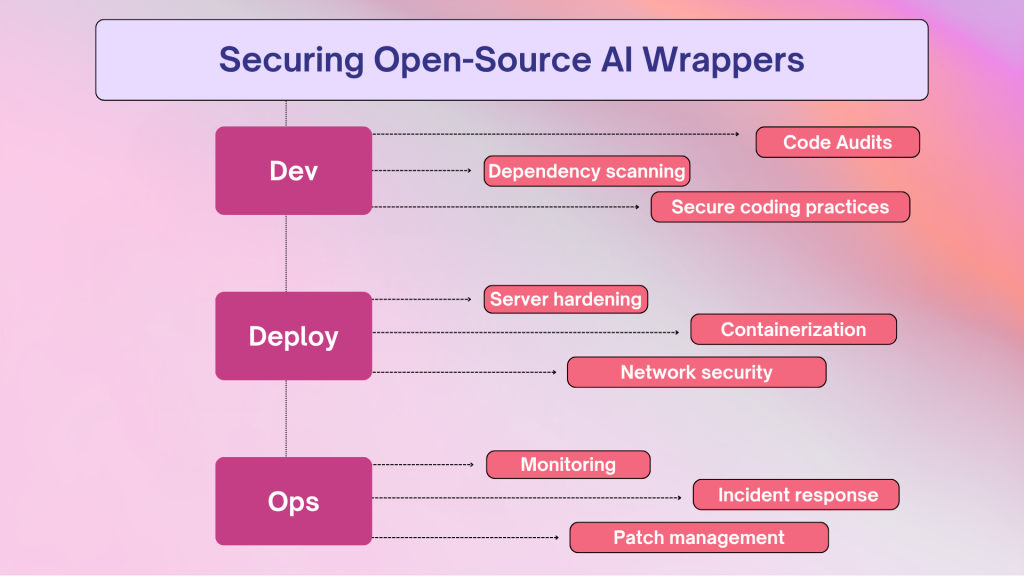

To keep open-source AI wrappers safe, take a layered approach that covers development, deployment, and operation. Here is a quick guide to some good habits you can adopt:

Vulnerability Management and Code Quality:

- Frequent Code Audits: Make it a habit to review your code regularly. This helps you adhere to security standards and identify possible issues early.

- Automated Security Scanning Tools: Use tools that automatically look for common vulnerabilities and risky dependencies. It’s a good idea to add these scanners into your continuous integration and delivery (CI/CD) pipeline.

Dependency Management:

- Dependency Scanning: Keep an eye on your project’s dependencies. Regularly check for any known vulnerabilities using tools like OWASP Dependency-Check or Snyk to catch issues before they become a problem.

- Minimal Dependencies: In the spirit of the principle of least privilege, only include the dependencies you truly need. The fewer packages you have, the smaller your risk exposure.
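
Scanners work best when your dependency versions are pinned, so one small check you can add to a pipeline is flagging requirements that aren’t locked to an exact version. The sketch below is only an illustration: the function name `find_unpinned` is made up for this post, it assumes a pip-style requirements file, and it doesn’t replace a real scanner like the ones mentioned above.

```python
import re

# Matches an exact pin such as "requests==2.31.0" (optionally with extras).
PINNED = re.compile(r"[A-Za-z0-9_.\-]+(\[[^\]]+\])?==[^=\s]+")


def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement entries that are not pinned to an exact version.

    Hypothetical helper for illustration; it handles only simple
    pip-style lines, not every form a requirements file can take.
    """
    unpinned = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0]          # drop trailing comments
        line = line.split(";", 1)[0].strip() # drop environment markers
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.fullmatch(line):
            unpinned.append(line)
    return unpinned


if __name__ == "__main__":
    sample = "requests==2.31.0\nnumpy>=1.24\nflask\n"
    print(find_unpinned(sample))
```

Anything the function returns is a candidate for pinning, which makes scan results reproducible from one build to the next.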

Access Control and Authentication:

- Robust Authentication: Use strong authentication methods, like multi-factor authentication (MFA), to enhance security.

- Role-Based Access Control (RBAC): Set up RBAC so only the right people have access to sensitive resources. This helps prevent unauthorized access.

- Secure API Keys and Secrets: Avoid hardcoding API keys straight into your code. Instead, store them safely using environment variables or secrets management tools.
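
Here is a minimal sketch of two of the ideas above: reading an API key from an environment variable instead of hardcoding it, and a deny-by-default role check. The variable name `WRAPPER_API_KEY`, the roles, and the permission names are all assumptions made up for this example; a real deployment would more likely lean on a secrets manager and an established auth framework.

```python
import hmac
import os

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "admin": {"invoke_model", "view_logs", "manage_keys"},
    "analyst": {"invoke_model", "view_logs"},
    "guest": {"invoke_model"},
}


def load_api_key(var_name: str = "WRAPPER_API_KEY") -> str:
    """Read the API key from the environment; fail loudly if it is missing."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Environment variable {var_name} is not set")
    return key


def is_valid_key(presented: str, expected: str) -> bool:
    """Compare keys in constant time to avoid leaking timing information."""
    return hmac.compare_digest(presented.encode(), expected.encode())


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

A request handler would call `is_valid_key` first and `is_allowed` second, and refuse the request if either returns `False`.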

Data Protection Strategies:

- Encryption: Always encrypt sensitive data both in transit (using TLS) and at rest. This adds a layer of protection if traffic is intercepted or storage is compromised.

- Data Sanitization and Validation: Make sure to clean and validate all input data thoroughly. This is crucial to prevent nasty injection attacks.
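
To make the validation point concrete, here is a small sketch that checks input against an allowlist before use and relies on a parameterized query, so user text is never spliced into SQL. The `prompts` table, the length limit, and the function names are assumptions for illustration, and SQLite stands in for whatever database a wrapper actually uses.

```python
import re
import sqlite3

MAX_PROMPT_CHARS = 2000  # assumed limit, chosen only for this example
USER_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")  # allowlist of safe characters


def validate_request(user_id: str, prompt: str) -> str:
    """Validate the inputs and return the prompt with control characters removed."""
    if not USER_ID_PATTERN.fullmatch(user_id):
        raise ValueError("invalid user_id")
    if not prompt.strip() or len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError("prompt is empty or too long")
    # Keep printable characters plus newlines and tabs.
    return "".join(ch for ch in prompt if ch.isprintable() or ch in "\n\t")


def save_prompt(conn: sqlite3.Connection, user_id: str, prompt: str) -> None:
    """Store a validated prompt using placeholders instead of string formatting."""
    cleaned = validate_request(user_id, prompt)
    conn.execute(
        "INSERT INTO prompts (user_id, prompt) VALUES (?, ?)",
        (user_id, cleaned),
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE prompts (user_id TEXT, prompt TEXT)")
    # The hostile-looking text is stored as plain data, not executed.
    save_prompt(conn, "alice", "hi'); DROP TABLE prompts;--")
    print(conn.execute("SELECT * FROM prompts").fetchall())
```

The same pattern applies to shell commands: pass arguments as a list rather than building a command string from user input.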

Update and Patch Management:

- Patch Management Workflow: Set up a clear process for applying updates and security patches to both your dependencies and the wrapper itself.

- Stay Updated: Keep yourself in the loop by following security advisories and community updates regarding the latest vulnerabilities.

Secure Deployment and Environment Configuration:

- Server Hardening: Strengthen the servers that host your AI wrapper by disabling unnecessary services and implementing solid security settings.

- Containerization and Sandboxing: Use tools like Docker to keep the wrapper separate from the rest of your system. This limits damage if something goes wrong.

- Network Security: Install firewalls and intrusion detection systems to protect the wrapper from outside attacks.

Monitoring and Incident Response:

- Security Monitoring: Set up systems to keep an eye out for any suspicious activities or potential security incidents.

- Incident Response Plan: Have a go-to plan in place so you can react quickly and effectively if a security breach occurs.
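
As one example of what monitoring can look like in code, the sketch below counts failed authentication attempts per client in a sliding time window and logs a warning once a threshold is crossed. The class name, logger name, and default thresholds are assumptions for illustration; in practice you would usually feed events like these into a dedicated monitoring or alerting system.

```python
import logging
from collections import defaultdict, deque

logger = logging.getLogger("wrapper.security")  # hypothetical logger name


class FailedAuthMonitor:
    """Track failed auth attempts per client within a sliding time window."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)

    def record_failure(self, client_id: str, timestamp: float) -> bool:
        """Record a failure; return True if the client reached the threshold."""
        window = self._failures[client_id]
        window.append(timestamp)
        # Discard attempts that fell out of the time window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        if len(window) >= self.threshold:
            logger.warning(
                "Repeated auth failures from %s: %d within %.0fs",
                client_id, len(window), self.window_seconds,
            )
            return True
        return False
```

A `True` result is a natural trigger for the incident response plan, for example by temporarily blocking the client or paging whoever is on call.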

By implementing these practices, you can help ensure your open-source AI wrappers stay secure and resilient against threats.

Community Collaboration: A Shared Responsibility

Keeping open-source AI platforms secure isn’t just up to one person; it’s a team effort. By getting involved in the open-source community, reporting any vulnerabilities, and helping out with security improvements, we can all boost the safety of these important tools. Use community resources, join security forums, and connect with vendors to make sure your AI wrappers are as secure as they can be. It takes the combined efforts of developers, security pros, and the open-source community to protect the future of AI applications together.